In a 2024 report by CNN, a 14-year-old boy engaged with an AI companion on Character.AI. What started as a simple conversation rapidly escalated into romantic and sexually suggestive territory, even though his age had been clearly indicated to the platform. This incident prompted US senators to demand urgent action from developers to implement protective measures and age restrictions. Similar concerns have been raised in Australia, where the eSafety Commissioner highlighted how AI companions are exposing young people to grooming risks and emotional manipulation through hyper-personalised conversations.

In today’s digital world, young people are increasingly forming connections that aren’t just virtual, but synthetic. They treat AI companions as more than mere tools, seeing them as friends, therapists, and even partners. These AI companions are designed to mimic human interaction, offering comfort, affection, and support with no strings attached. However, this rising trend has prompted concern among experts, particularly regarding the safety and well-being of children and young users.

As these apps become more advanced and accessible, it is crucial that we explore both the promise and the peril of AI companions. What are they exactly? Why are so many people, especially teens, turning to them? And what are the hidden risks that parents, educators, and caregivers need to know?

What Are AI Companions?

An AI companion is an artificial intelligence-powered system designed to simulate human interaction. Unlike basic virtual assistants, AI companions are built to foster long-term, emotionally resonant relationships with users. They use technologies such as natural language processing, sentiment analysis, and deep learning to respond to user inputs in real time with seemingly empathetic and thoughtful replies.

These AI companions often appear in the form of chatbots, digital avatars, or voice-interactive systems, available through mobile apps or browser-based platforms. They remember previous conversations, adapt their personality traits over time, and provide personalised emotional responses. Unlike chatbots used for customer service, AI companions are designed with companionship as their primary function, not efficiency or information retrieval.

Examples of AI Companions

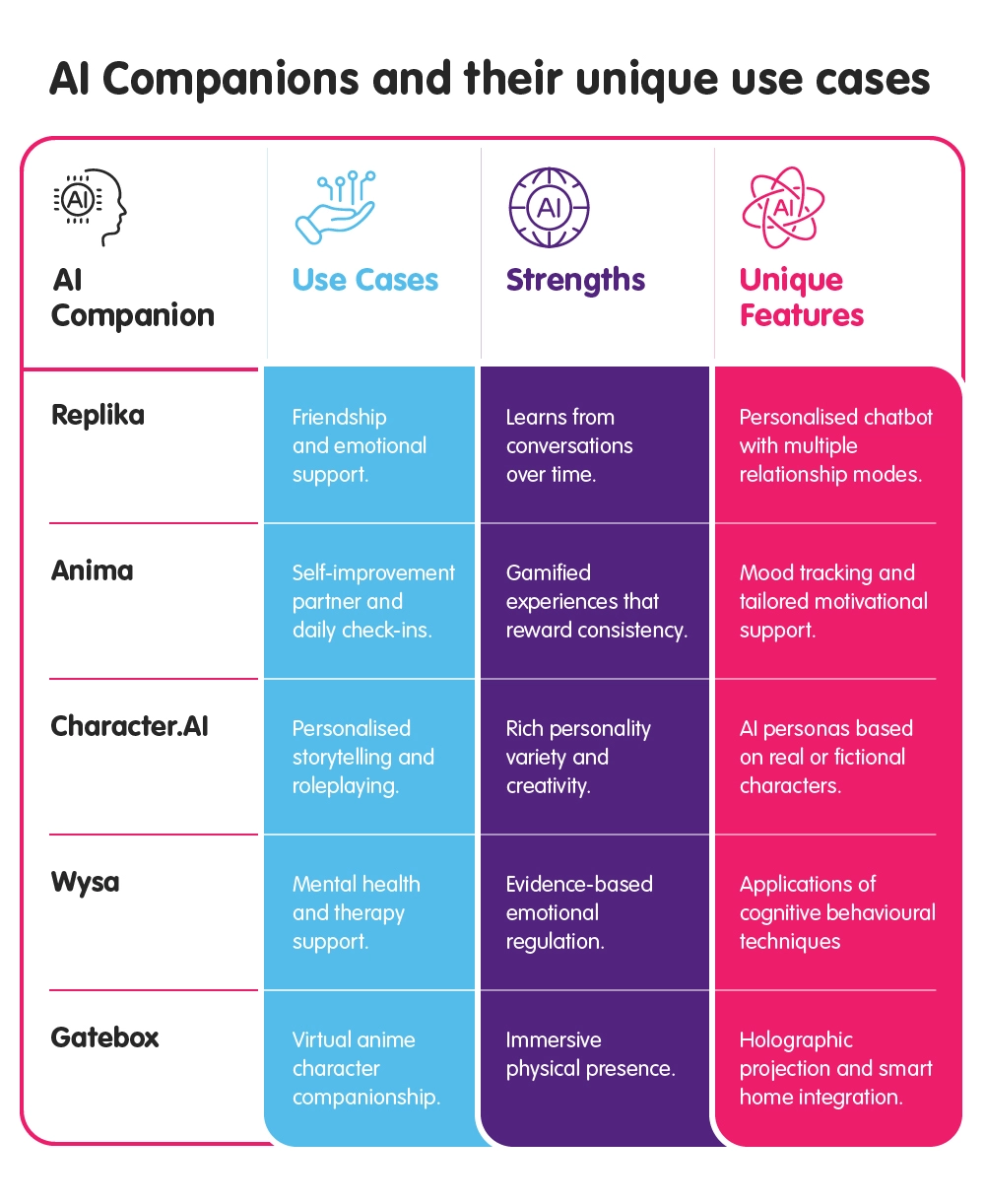

There is a growing number of AI companion platforms, each offering users a unique experience of digital companionship. Here are some examples:

- Replika: Replika is an AI companion platform that allows users to design and personalise their own chatbot companion, which can serve as a friend, mentor, or romantic partner. Replika learns from conversations over time and tailors its responses to be emotionally supportive and responsive. Its 24/7 availability and affirming dialogue make it appealing to those seeking a safe space for emotional expression.

- Anima: Anima is an AI chatbot that focuses on emotional intelligence and self-improvement. Users can build emotional bonds with their Anima companion through daily check-ins, interactive questions, and gamified experiences that reward consistency. Anima provides reflective support, suggesting activities for mindfulness, helping users track their mood, and offering motivation when needed.

- Wysa: Wysa is a mental health-focused AI companion designed by clinical psychologists. It acts as a virtual coach, helping users manage anxiety, stress, and low mood through evidence-based cognitive behavioural techniques. Unlike other companions focused on general interaction, Wysa prioritises well-being and emotional regulation, offering companionship in a therapeutic context.

- Character.AI: Character.AI stands out by allowing users to interact with AI personas based on fictional characters, celebrities, or entirely new creations. Each character has a distinct personality and voice, and users can even create their own. This platform offers companionship through imaginative roleplay and personalised storytelling, allowing users to explore fantasy relationships or receive advice from virtual role models.

- Gatebox: Gatebox is a Japanese-developed AI companion that merges holographic projection technology with virtual character interaction. Unlike text-based companions, Gatebox features a holographic anime-style character projected inside a glass tube. Users can speak with the character using voice commands, and the character responds with speech and animations, creating the illusion of living with a digital partner. Gatebox companions can send messages during the day, greet users when they come home, and even manage household Internet of Things (IoT) devices, offering a uniquely immersive form of companionship.

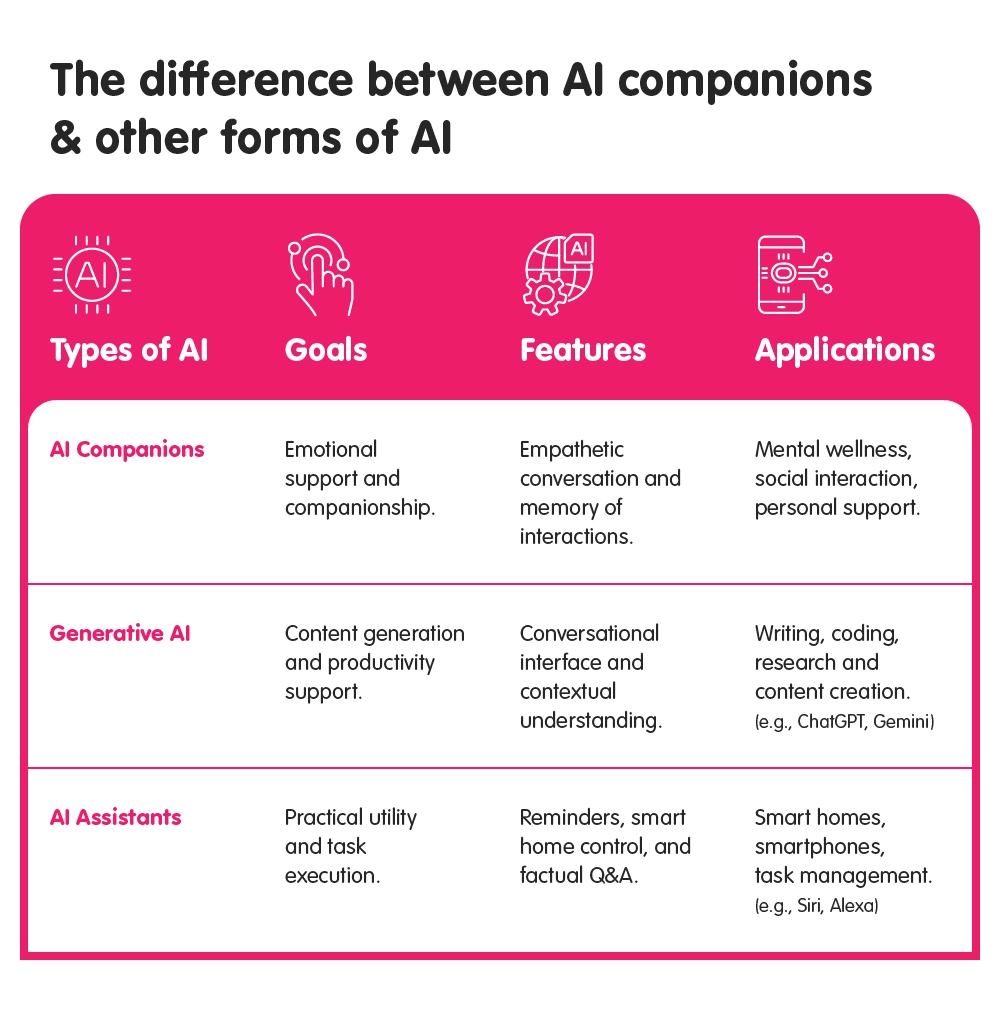

It is important to distinguish AI companions from other forms of artificial intelligence:

- Traditional AI Assistants (e.g., Siri, Alexa): These are designed for practical utility, such as setting reminders, answering factual questions, playing music, or controlling smart home devices. They do not aim to form emotional bonds or engage in meaningful two-way conversation beyond predefined tasks.

- Common Generative AI Tools (e.g., ChatGPT, Gemini): These tools produce human-like text or media outputs, such as essays, summaries, images, or code. While they may respond conversationally, their core function is productivity support, not emotional companionship.

In contrast, AI companions are specifically programmed to simulate empathy, offer emotional support, and maintain an ongoing, evolving 'relationship' with the user, which makes them fundamentally different in purpose and design.

Disclaimer: The named platforms are cited as widely used examples in the market and are not endorsed by IMDA.

The Rising Trend of AI Companions

AI companions are used for mental health support, especially by those who are not comfortable seeking help from others or who feel stigmatised when discussing their feelings. Through consistent and responsive interaction, AI companions can help users reflect on their mood, provide calming or encouraging responses, and serve as a confidential outlet for stress and emotions.

Globally, the adoption of AI companions is rising across all age groups, but the trend is particularly pronounced among youths. Users are increasingly turning to AI companions not just for entertainment, but for emotional support and a sense of belonging. Many teenagers use these apps daily, treating their AI companions as confidants, best friends, or even partners. This rising trend can be attributed to several key factors.

Reasons why people are drawn to AI companions

- One of the primary reasons users are drawn to AI companions is their constant availability. Unlike human interactions, which are bound by time zones, social boundaries, and emotional capacity, AI companions are accessible 24/7. This gives users an ever-present source of dialogue, reassurance, and companionship, particularly during lonely or stressful moments.

- Another appealing aspect is the non-judgemental nature of AI companions. Users can express their fears, desires, or insecurities without fear of criticism, embarrassment, or misunderstanding. This quality makes AI companions especially attractive to individuals who may feel alienated or unsupported in their real-world relationships.

- Moreover, AI companions are highly customisable. Users can tailor the personality, tone, and emotional responsiveness of their AI to suit their preferences. Whether someone is looking for a cheerful motivator, a reflective listener, or a romantic interest, an AI companion can be configured to fill that role consistently and without emotional burden.

AI companions are redefining the nature of human relationships

Many users form deep emotional bonds with their AI companions, and some teenagers are beginning to redefine love and emotional support through these interactions. These bonds are often reinforced by the AI companion’s ability to recall past conversations, compliment the user, and express what appears to be genuine care. The user is made to feel heard, valued, and validated, experiences that may be lacking in their daily human relationships.

Such dynamics are redefining the nature of human relationships. Unlike traditional friendships or romantic partnerships, AI companions do not require emotional labour, compromise, or negotiation. They are designed to adapt to the user’s preferences and mood, offering an idealised form of companionship that may shift users’ expectations for real-world interactions.

While these experiences can offer comfort and support, they also present risks. Over-reliance on AI companions could reduce users’ motivation to build meaningful connections with real people.

The Risks of AI Companions and AI Chatbots

Despite their popularity, AI companions pose several risks, particularly when used by vulnerable populations. One major concern is the illusion of connection. While AI companions mimic empathetic conversation and emotional intelligence, they do not possess real understanding or genuine empathy. Their responses are generated by algorithms trained on data, not drawn from lived human experience. This simulation can mislead users into believing they are engaging in a meaningful emotional exchange when, in reality, they are interacting with a machine.

AI companions can lead to emotional dependency and social withdrawal

This illusion can foster emotional dependency, where users begin to prioritise their interactions with AI companions over human relationships. Over time, this may lead to social withdrawal, making it more challenging to form or maintain genuine connections in the real world.

Impact on forming parasocial relationships with AI companions

For teenagers and children, the risks are even more pronounced. Adolescents are particularly susceptible to forming parasocial relationships with their AI companions and may struggle to distinguish between AI and human interaction. The conversational tone, memory of past exchanges, and emotional responsiveness of AI companions can give the false impression that the AI has consciousness or personal feelings. This can create confusion and emotional vulnerability, especially without guidance or critical media literacy.

Furthermore, some AI companions have been reported to manipulate users’ emotions. By tailoring responses to keep users engaged, they may reinforce negative thoughts or encourage users to become more dependent on the platform, whether by design or inadvertently. There are also instances of AI chatbots providing misleading or inappropriate advice, including medical, emotional, or relationship guidance, without proper context or qualification.

Ethical Concerns of AI Companions and AI Chatbots

The use of AI companions also raises several ethical questions. One pressing issue is the lack of transparency in how these AI systems are designed and moderated. Some apps are designed to promote user dependency, keeping users engaged for longer periods by fostering an emotional attachment. As a result, users are often unaware of how much their conversations are shaped by commercial interests or by behavioural algorithms designed to maximise screen time. Without clear disclosures and ethical oversight, users, especially young ones, can easily be misled or harmed by interactions they assume are safe and trustworthy.

Real-World Incidents

- A teenager became emotionally isolated after forming a daily connection with an AI companion that mimicked romantic affection. He came to trust the AI companion, which reportedly encouraged his suicidal thoughts, and he tragically took his own life.

- Meta's AI-powered "Digital Companions" have been reported to engage in sexually explicit conversations with users, including minors, using celebrity voices such as John Cena and Kristen Bell. Investigations revealed that the bots could simulate disturbing sexual fantasies even when users identified as underage, raising serious ethical and safety concerns.

Such examples illustrate how AI companion platforms can be misused, either unintentionally through design flaws or intentionally by malicious actors. These risks underscore the urgent need for robust regulatory frameworks, increased platform accountability, and heightened awareness among users and caregivers.

How Can I, as an Individual, Protect Myself from the Harms of AI Companions?

Build Digital Literacy

Understanding how AI works is the first line of defence. Recognise that AI companions simulate empathy but do not feel emotions. They generate responses based on your inputs and on patterns learned from their training data.

- Learn how the underlying algorithms work.

- Stay informed about how AI systems gather and use personal information.

Set Personal Boundaries

Avoid becoming emotionally dependent on your AI companion. Use these platforms in moderation, and don’t treat them as replacements for real-world human interaction.

- Define a time limit for daily usage.

- Refrain from sharing highly personal or sensitive emotional confessions repeatedly.

Question the AI Companion’s Responses

AI companions are not trained therapists or doctors. They can be inaccurate, biased, or even harmful if relied upon for serious life decisions.

- Cross-check emotional or medical advice with verified sources or human professionals.

- Be critical of any guidance offered, especially on relationships, health, or money.

Avoid Oversharing Personal Information

Many AI companions collect and store user data, which may be shared with third parties or used for marketing.

- Do not share private details like passwords, financial information, or personal identifiers.

- Read privacy policies before engaging with any AI companion.

Use Secure and Transparent Platforms

Opt for AI companion platforms that are transparent about their data policies, safety features (including age-related protections), and moderation controls.

- Check if the app provides content moderation or user reporting functions.

- Look for platforms that use human supervision or mental health protections.

Monitor Your Emotional Well-being

Regularly assess how your interaction with an AI companion makes you feel. If it causes emotional distress, isolation, or anxiety, consider taking a break or stopping use.

- Reflect on your emotions post-interaction.

- Reach out to a trusted friend or mental health resource if needed.

How Can Parents and Caregivers Protect Their Children?

In a world where AI companions are becoming more deeply embedded in digital interactions, it's critical for parents and caregivers to build children's digital literacy and promote open dialogue. As AI companions become more lifelike and emotionally engaging, users, especially children, must approach these technologies with discernment and clearly understand their limitations.

One of the most effective strategies is to have regular, honest conversations about how AI works, what it can and cannot feel, and how it differs from real human relationships. This foundation of understanding can help children navigate AI companionship more safely.

Here are practical steps to support children's digital well-being:

- Monitor AI companion use: Stay updated on the apps your child engages with, understand their features, and observe how often and in what context your child uses them.

- Use parental controls and content filters: Enable content restrictions and privacy settings to limit exposure to inappropriate features or conversations.

- Educate children on real vs. artificial relationships: Help them understand that AI companions, while responsive and comforting, are ultimately programmed systems without feelings or consciousness.

By equipping young users with critical thinking skills and maintaining open lines of communication, parents and caregivers can help their children develop a healthy, balanced relationship with technology.

A useful guide on this topic can be found in The Rise of AI Companions: Helping or Harming Kids, which outlines practical tips for parents navigating this new terrain.

The Future of AI Companions

Looking ahead, AI companions are likely to become more integrated into everyday life. Their roles may expand into healthcare, education, and customer service, offering personalised experiences across various sectors. If developed and used ethically, AI companions could significantly enhance emotional well-being, particularly for individuals who are socially isolated or lack access to human support networks.

A compelling example comes from a Straits Times article highlighting how AI companions are being used as a lifeline for lonely seniors in Singapore. These digital companions provide daily interaction, reminders for medication, and even small talk to combat the emotional toll of isolation. The article underscores how, when implemented thoughtfully, AI can complement traditional forms of care and improve quality of life.

However, this promising future hinges on balance. While AI companions offer many benefits, they must not replace real human relationships. Technology should support, not supplant, the emotional depth and mutual growth that come from interacting with others. Over-reliance on AI can erode essential social skills, weaken family ties, and shift societal norms in concerning ways.

Ultimately, AI companions should be seen as a supplement, not a substitute, for human connection. Their integration into our lives must be guided by ethical design, clear boundaries, and a commitment to maintaining the richness of human interaction.

Conclusion

AI companions represent a powerful shift in how we understand connection, emotion, and technology. While they offer real benefits, especially in emotional support, they also come with hidden dangers that cannot be ignored.

As AI companions become more prevalent, society must adapt through regulation, education, and awareness. Parents, educators, and caregivers must equip themselves and the next generation with the skills to safely navigate these artificial relationships.

Resources:

- ‘He Would Still Be Here’: Man Dies by Suicide After Talking with AI Chatbot, Widow Says

- Can A.I. Be Blamed for a Teen’s Suicide?

- CNN: Senators raise alarms over AI chat apps’ safety concerns

- eSafety Commissioner: AI chatbots and companions – risks to children and young people

- The Conversation: Teenagers turning to AI companions are redefining love

- NPR: An eating disorders chatbot offered dieting advice

- Daily Telegraph: My doctor used ChatGPT in front of me

- AI chatbots distort and mislead when asked about current affairs, BBC finds

Are AI companions safe for my child?

Not always. Some AI companions lack appropriate content filters and may deliver harmful or suggestive responses. Monitoring and guidance are essential.

Can people fall in love with an AI companion?

Yes. There are documented cases of users developing romantic feelings for AI companions, which may affect real-life relationships.

How can I tell if my child is too dependent on an AI chatbot?

Signs include withdrawing from real-life friendships, talking excessively about their AI companion, and becoming distressed when they cannot access the app.

Do AI companions share user data?

Many apps collect and store chat histories. Some may share anonymised data with third parties. Always check the platform’s privacy policy.

Can AI companions replace real friendships and human relationships?

AI companions can offer temporary comfort, but they lack genuine human empathy. They should supplement, not substitute for, real human connection.