Imagine watching a video of a trusted, authoritative political figure delivering a powerful and impactful speech, only to find out later that they never said a word of it. This isn't science fiction. It's the unsettling reality of deepfake technology, where artificial intelligence (AI) is used to create highly realistic yet fake videos, audio, and images.

In recent years, deepfake content has surged across the internet, raising alarms about truth, trust, and digital safety. What began as a fringe technology experiment has now evolved into a sophisticated tool with the power to manipulate public opinion, spread disinformation, and even possibly influence major events.

A notable example took place in Singapore in 2024, when deepfake videos of Senior Minister Lee Hsien Loong commenting on international relations and foreign leaders surfaced online. The videos showed Mr Lee apparently talking about US-China relations in the context of tensions in the South China Sea, as well as the two superpowers' relationship with the Philippines. The clips quickly gained traction, causing much confusion among citizens. However, the videos were later confirmed to be fabricated using deepfake technology to mimic Mr Lee’s voice and likeness. Mr Lee warned the public about them, cautioning, “Someone behind them wants to make it seem that these are views supported by me or the Singapore government. This is dangerous and potentially harmful to our national interests.”

This incident underscores the growing threat of deepfakes and why it’s more important than ever for the public to understand what they are, how they work, and how to spot them. Let's dive deeper.

What are Deepfakes or Deepfake Technology?

A deepfake is a piece of synthetic media created using artificial intelligence and machine learning. It can be a video, audio recording, or image in which a person appears to say or do something they never actually did.

Unlike simple video edits, deepfakes can replicate facial expressions, voice tones, gestures, and even emotional nuances with chilling accuracy. All it takes is training data (videos or audio clips of a person) and powerful software.

Origins and Meaning of the Term "Deepfake"

The name “deepfake” comes from “deep learning” (a subset of AI) and “fake.” The term was first coined by an anonymous Reddit user in 2017, who began sharing AI-generated manipulated videos. The name stuck and quickly became the standard label for media altered using AI.

Initially viewed as experimental fun, deepfake technology rapidly evolved through online forums and open-source communities. Today, anyone with a digital device and access to certain AI models can create a deepfake.

How Are Deepfakes Generated?

The Role of Artificial Intelligence (AI) and Machine Learning

At the core of deepfake technology lies artificial intelligence. More specifically, it uses machine learning to understand and replicate human behaviour.

To generate a deepfake, an AI model analyses real footage of a person to learn their facial movements, voice patterns, and speaking styles. With this training, the model can synthesise new content that mimics the person with remarkable similarity.

Deep Learning and Generative Adversarial Networks (GANs)

Introducing GANs: The Brains Behind Deepfakes

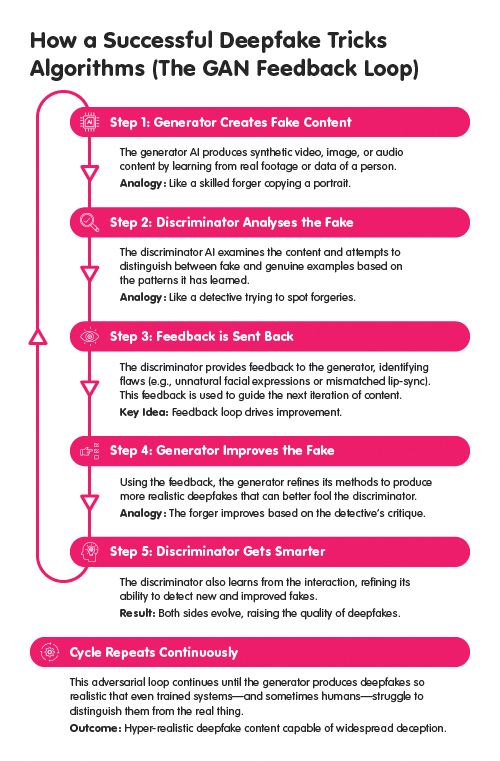

One of the best-known techniques for creating deepfakes is the Generative Adversarial Network (GAN). A GAN pits two AI networks against each other:

- Generator: Creates fake images or videos.

- Discriminator: Tries to detect if the media is fake or real.

How GANs Improve Deepfakes

As the generator produces deepfakes, the discriminator critiques them. Over time, the generator gets better at fooling the discriminator, resulting in highly convincing content.

To put it simply, GANs are like a forger and a detective playing an endless game, each learning from the other, leading to highly realistic fakes.
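To make the forger-and-detective loop concrete, here is a minimal sketch in plain Python. It is a toy, not how real deepfake tools are built: the "media" is a single number drawn from a bell curve centred on 4, the generator has one adjustable parameter (`shift`), and the discriminator is a simple logistic classifier. The function names, learning rate, batch size, and step count are all illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function, written to avoid overflow for large |t|."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 4.0, steps: int = 5000,
                  batch: int = 32, lr: float = 0.05, seed: int = 0) -> float:
    """Train a one-parameter generator against a logistic discriminator.

    Real samples come from N(real_mean, 1). The generator emits z + shift
    with z ~ N(0, 1), so it fools the discriminator best when shift is
    close to real_mean. Returns the learned shift.
    """
    rng = random.Random(seed)
    shift = 0.0        # generator parameter (the "forger")
    w, c = 0.0, 0.0    # discriminator D(x) = sigmoid(w*x + c) (the "detective")

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + shift for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            err = 1.0 - sigmoid(w * x + c)
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = -sigmoid(w * x + c)
            grad_w += err * x
            grad_c += err
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake), i.e. move the
        # fakes in whichever direction the discriminator rates as "more real".
        grad_shift = sum((1.0 - sigmoid(w * x + c)) * w for x in fake)
        shift += lr * grad_shift / batch

    return shift


if __name__ == "__main__":
    print(f"learned shift: {train_toy_gan():.2f} (real mean is 4.0)")
```

Calling `train_toy_gan()` returns the generator's learned shift, which should drift from 0 towards the real mean of 4 as the two models take turns improving. Real systems follow the same back-and-forth, but replace these few numbers with deep neural networks trained on images, video, or audio.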

How Deepfakes Differ from Other Forms of Digital Manipulation

Traditional editing tools, such as Photoshop, require manual effort and often produce noticeable flaws. Deepfakes, by contrast, are largely automated. Once a model is trained, the AI does the heavy lifting, making the technique far more advanced than basic photo or video alterations.

Deepfakes can mimic voices, sync lips with speech, and generate entirely new scenes. That’s what makes them both fascinating and frightening.

What Is Deepfake Technology Used For?

As deepfake technology evolves, its uses are spreading across multiple industries and platforms, from entertainment and education to criminal schemes.

Are All Deepfakes Malicious?

The term "deepfake" often carries negative connotations, but not all deepfakes are created with harmful intent. While the risks are significant, it's essential to understand that deepfake technology also has legitimate and beneficial applications in various fields.

Legitimate Uses of Deepfake Technology & Examples

Ethical applications of deepfake technology are emerging across industries:

Deepfake Technology in Entertainment and Film Industry

- De-ageing Actors: Deepfake algorithms are being used to digitally make actors appear younger, avoiding the need for excessive makeup or CGI. In the film Here (2024), Tom Hanks and Robin Wright were convincingly de-aged across several decades using AI-driven facial mapping.

- Recreating Deceased Actors: Filmmakers can now generate digital versions of late actors to reprise roles or appear in new productions. A well-known precursor is the digital recreation of Peter Cushing’s likeness for the Star Wars film Rogue One (2016), which relied on conventional CGI and performance capture rather than deep learning.

Deepfake Technology in Education and Training

- Deepfake-powered lip synchronisation and voice cloning can assist in generating real-time translations, aiding individuals with hearing or language barriers.

- Virtual instructors created using deepfake tools can offer real-time explanations and custom-tailored responses, helping students visualise complex topics through lifelike interactions.

- Historical Reenactments: Educational platforms such as StoryFile and MyHeritage’s Deep Nostalgia can use deepfake techniques, such as building avatars of historical figures, to bring history lessons to life. Students can then "meet" famous personalities like Abraham Lincoln or Cleopatra in interactive modules.

Deepfake Technology in Art

- Artists have utilised deepfake technology to create innovative artworks. Singaporean artist Charmaine Poh explores themes of identity and agency in her work, "GOOD MORNING YOUNG BODY." In this piece, Poh utilises deepfake technology to resurrect her 12-year-old self, a character named E-Ching from the early 2000s television series We Are R.E.M. By creating a deepfake avatar of her younger self, Poh addresses issues of objectification and harassment in digital spaces, offering a feminist critique of misogyny online. This work was showcased in the exhibition "Proof of Personhood" at the Singapore Art Museum.

Deepfake Technology in Business and Marketing

- Virtual Assistants for Marketing: Brands deploy AI-generated spokespersons or avatars that can speak in multiple languages and personalise product pitches based on consumer behaviour. TikTok’s Symphony Avatars is one example of such a tool.

- Brand Avatars for Engagement: Companies use realistic digital personas to respond to queries or present product information, improving user experience while saving human resource costs.

These examples demonstrate how, when used responsibly, deepfake technology can enhance creativity and educational opportunities.

Malicious Uses of Deepfakes and Examples of Dangers

Unfortunately, the same tools used for innovation can also be weaponised:

Deepfake Technology Used For Spreading Misinformation

- Deepfakes have been utilised to generate fabricated political speeches, misrepresent public figures, or promote false narratives, influencing public opinion or inciting unrest. For example, deepfake videos of political figures have been used to spread disinformation, damaging reputations and impacting societal cohesion.

Deepfake Technology Used For Fraud

- Identity Theft and Financial Fraud: Scammers have utilised AI-driven deepfake technology to impersonate company executives in Singapore. They contact employees via unsolicited WhatsApp messages, claiming to be senior executives. Victims are then invited to live Zoom video calls where deepfake visuals of these executives instruct them to initiate substantial fund transfers under the guise of legitimate business transactions.

- Impersonation Attacks: Scammers have cloned the voices of loved ones or colleagues to deceive victims into disclosing sensitive information or sending money. These scams are becoming increasingly sophisticated, prompting official warnings from authorities including the Cyber Security Agency of Singapore (CSA), the Singapore Police Force (SPF), and the Monetary Authority of Singapore (MAS).

Deepfake Technology Used For Non-consensual Content and Privacy Violations

- One of the most disturbing uses is creating explicit content involving real individuals without their consent. This abuse often targets women and has led to significant emotional and reputational harm.

These examples illustrate the broad functionality of deepfakes—they’re not confined to any one field, and the consequences of their use depend largely on the creator’s intent.

Legality of Deepfakes

The legality of deepfakes varies from jurisdiction to jurisdiction. In some countries, creating deepfakes is legal as long as they do not cause harm. However, when used for fraud, defamation, harassment, or electoral interference, they may breach criminal, privacy, or civil laws.

Singapore, for instance, has enacted laws such as the Protection from Online Falsehoods and Manipulation Act (POFMA) to combat misinformation, including AI-generated content. Globally, efforts are increasing to regulate the malicious use of deepfakes while supporting ethical innovation.

How to Spot Deepfakes?

As deepfake technology becomes more convincing, distinguishing between real and fake content is becoming increasingly difficult. While some deepfakes are nearly flawless, many still contain tell-tale signs that can help the observant viewer detect manipulation.

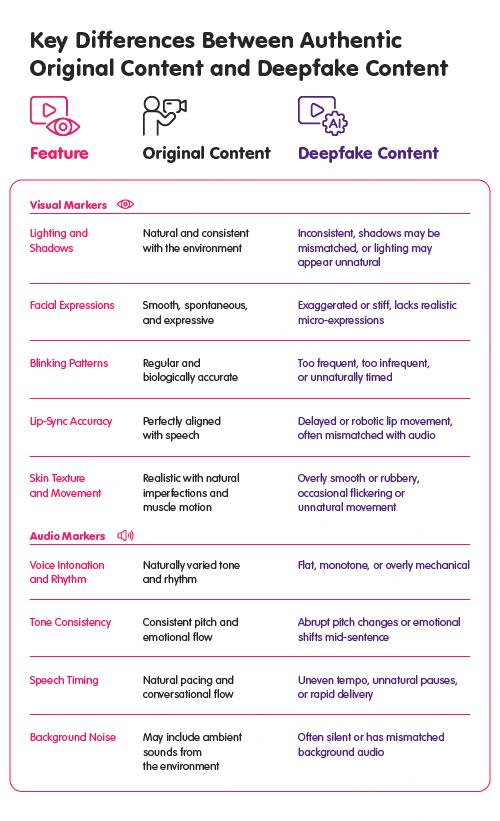

Below are key visual and audio cues, along with best practices to help verify the authenticity of suspicious media.

Visual Cues That Indicate Manipulated Content

Even highly sophisticated deepfakes often exhibit subtle flaws that can reveal their artificial nature:

- Inconsistent Lighting and Shadows: Deepfake videos may have unnatural or mismatched lighting, such as shadows falling in the wrong direction or appearing inconsistent between the face and surroundings. This occurs because AI struggles to perfectly simulate environmental lighting.

- Unnatural Facial Expressions and Blinking: Some deepfakes fail to replicate natural micro-expressions or blinking patterns. For example, blinking may appear too infrequent or overly mechanical, as early deepfake models often ignored eye movement dynamics.

- Lip-sync Errors in Videos: The mouth may not accurately match the spoken words. Lip movements can appear delayed, overly smooth, or robotic. Pay close attention to speech synchronisation—especially when audio and video appear to be out of sync.
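The blinking cue above can be turned into a rough numerical check. The sketch below assumes you already have a per-frame "eye openness" score between 0 and 1 from some face-tracking tool (not shown here). The 0.2 closed-eye threshold and the cutoff of 6 blinks per minute are illustrative assumptions, not established limits.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness signal."""
    blinks = 0
    eye_closed = False
    for value in openness:
        if value < closed_below and not eye_closed:
            blinks += 1          # eye has just closed: start of a new blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False   # eye is open again
    return blinks


def blinks_per_minute(openness: Sequence[float], fps: float,
                      closed_below: float = 0.2) -> float:
    """Convert the blink count into a rate, given the video's frame rate."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(openness) / fps / 60.0
    return count_blinks(openness, closed_below) / minutes


def looks_suspicious(openness: Sequence[float], fps: float,
                     min_rate: float = 6.0) -> bool:
    """Flag clips whose blink rate falls below a hypothetical minimum."""
    return blinks_per_minute(openness, fps) < min_rate


if __name__ == "__main__":
    # Ten seconds at 30 fps with three short blinks.
    clip = ([1.0] * 97 + [0.05] * 3) * 3
    print(blinks_per_minute(clip, fps=30.0), looks_suspicious(clip, fps=30.0))
```

Treat a low blink rate as a hint rather than proof. Short clips give noisy estimates, and newer deepfake models may reproduce blinking convincingly.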

Audio Cues of Deepfake Voices

Just like visuals, audio deepfakes can have glitches or artificial qualities:

- Robotic-sounding Speech: AI-generated voices often lack the natural rhythm and intonation of human speech. The output may sound flat or monotonous, particularly when expressing emotion.

- Sudden Tone Changes: Audio may suddenly jump in pitch or change emotional tone mid-sentence. These transitions can be abrupt and jarring, revealing the artificial nature of the synthesis.

Best Practices to Verify Authenticity

- Reverse Image Search: Take screenshots and use tools like Google Images or TinEye to find the original source of the media. If the same image appears in another context, it may be reused or manipulated.

- Check Hidden Information In The File: Examine the hidden details stored inside the photo or video file for anomalies. Some tools, such as FotoForensics or InVID, can analyse timestamps, GPS data, and editing history.

- Cross-Verify Content: Compare the video with official sources, such as news websites, government press releases, or verified social media accounts. If the content is genuine, it will likely be covered by multiple reputable sources.

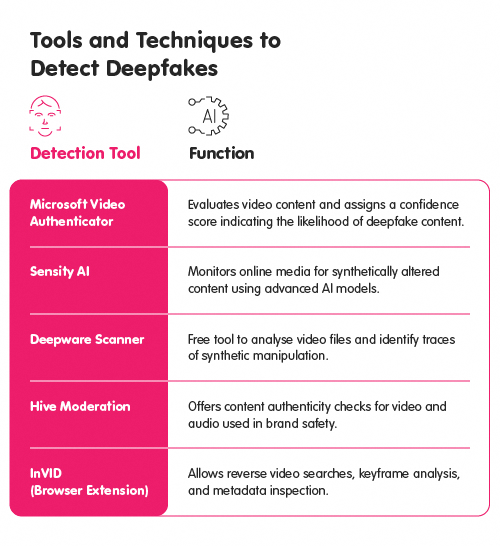

- Use Deepfake Detection Tools: Apps and platforms that attempt to distinguish fake from real content can provide a further check (see the tools section below).
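As a small illustration of what "hidden information in the file" means, the sketch below checks whether a JPEG image carries an EXIF metadata block, which is where details such as timestamps and GPS coordinates are usually stored. It uses only the Python standard library and walks the JPEG segment structure by hand. The function name is hypothetical, and this is not how FotoForensics or InVID work internally.

```python
import struct
from typing import Optional


def find_exif_segment(data: bytes) -> Optional[bytes]:
    """Return the raw EXIF payload of a JPEG, or None if there is none.

    A JPEG starts with the marker FF D8, followed by segments of the form
    FF <marker> <2-byte big-endian length> <payload>. EXIF data lives in
    an APP1 segment (marker E1) whose payload begins with b"Exif\\x00\\x00".
    """
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break                      # malformed segment header: stop
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):
            break                      # image data or end of file reached
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return payload[6:]         # strip the "Exif\0\0" prefix
        pos += 2 + length              # length counts its own two bytes
    return None


if __name__ == "__main__":
    import sys
    with open(sys.argv[1], "rb") as handle:
        exif = find_exif_segment(handle.read())
    print("EXIF found" if exif is not None else "no EXIF metadata")
```

Missing metadata does not prove manipulation, because many social media platforms strip it on upload. Its presence is simply a starting point for checking whether the recorded details match the claimed origin of the media.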

Tools and Techniques to Detect Deepfakes

As deepfake detection continues to evolve, various tools and platforms have emerged to help verify the authenticity of digital content, including Microsoft Video Authenticator, Sensity AI, Deepware Scanner, Hive Moderation, and InVID (Browser Extension).

What to Do If You Encounter a Deepfake?

As deepfake technology becomes increasingly sophisticated, it's crucial to respond wisely when confronted with potentially manipulated media. Taking the right steps can help minimise harm, protect yourself and others, and limit the spread of disinformation.

Report Suspicious Content

If you suspect that a video or image has been altered using deepfake methods, report it immediately on the platform where it was posted. Most social media sites have mechanisms for reporting false or harmful content.

For more serious cases, such as scams, political disinformation, or non-consensual media, report the incident to your local authorities or cybercrime unit. In Singapore, you can refer such cases to the Cyber Security Agency (CSA) or IMDA’s anti-fake news platform under POFMA.

Educate Others

Digital literacy is key to combating the spread of deepfakes. Share what you know about deepfakes with your peers, especially how to recognise them and why verification matters. Encourage community groups to incorporate media awareness programmes.

The more people are aware of how deepfakes work, the less effective malicious content becomes.

Strengthen Digital Security

Protecting your digital footprint can reduce the chances of your image or voice being misused to create a deepfake:

- Avoid posting high-resolution videos or voice recordings publicly.

- Use strong passwords and enable two-factor authentication on your accounts.

- Regularly review and update your social media privacy settings.

Being proactive about cybersecurity reduces your vulnerability to impersonation or identity theft.

Conclusion

Deepfakes are more than just fake content. They are a growing challenge that threatens the authenticity of everything we see and hear online. As deepfake technology continues to evolve, the responsibility lies with every individual to stay informed, practise media literacy, and exercise caution before trusting what we see. By learning how deepfakes work and how to detect them, we can protect ourselves and others from deception.
